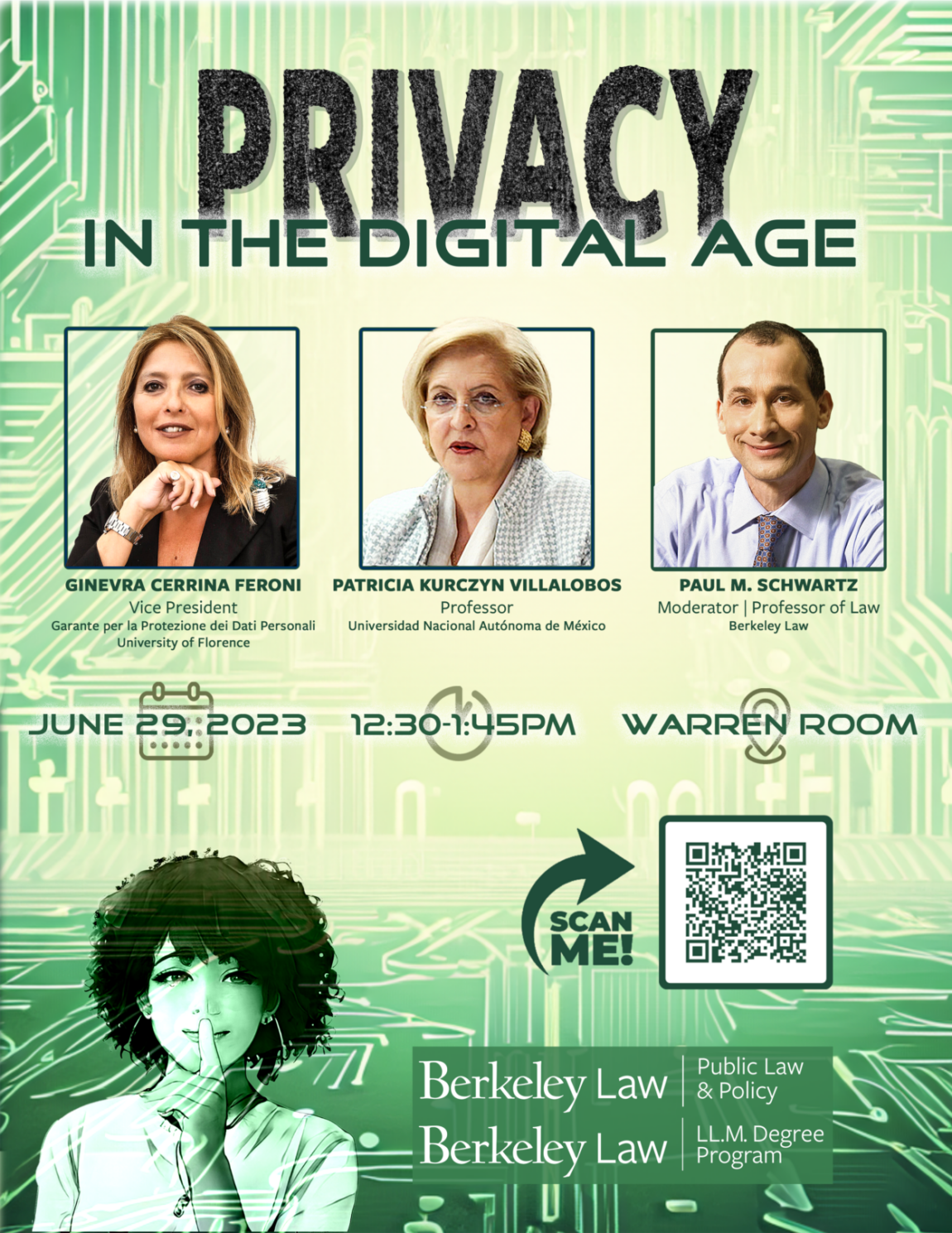

PRIVACY IN THE DIGITAL AGE

Thursday, June 29, 2023 | Warren Room | Berkeley Law

Event Video

Event Description

Professor Paul Schwartz will hold a moderated discussion with Professor Ginevra Cerrina Feroni, the Vice President of the Italian Data Protection Authority (Garante per la Protezione dei Dati Personali). Prof. Cerrina Feroni is one of the most important scholars and lawyers in Europe working on issues of electronic data and privacy today. She is Full Professor of European and Comparative Constitutional Law at the University of Florence. Prof. Cerrina Feroni is also a practicing lawyer, a member of the scientific board of several academic journals in the area of comparative public law, and a regular columnist for several national newspapers. She has often been nominated to posts on governmental and parliamentary committees and working groups on constitutional and administrative matters.

Panelists

Professor Cerrina Feroni is one of the most important scholars and lawyers in Europe working on issues of electronic data and privacy today. She is Full Professor of European and Comparative Constitutional Law at the University of Florence. Prof. Cerrina Feroni is also a practicing lawyer, a member of the scientific board of several academic journals in the area of comparative public law, and a regular columnist for several national newspapers. She has often been nominated to posts on governmental and parliamentary committees and working groups on constitutional and administrative matters.

Paul M. Schwartz, Professor of Law at UC Berkeley School of Law, is a leading international expert on information privacy, copyright, telecommunications and information law. He has published widely on these topics. In the US, his articles and essays have appeared in periodicals such as the Harvard Law Review, Yale Law Journal, Stanford Law Review, Columbia Law Review, Michigan Law Review, and N.Y.U. Law Review. His co-authored books include Data Privacy Law (1996, supp. 1998) and Data Protection Law and On-line Services: Regulatory Responses (1998), a study carried out for the Commission of the European Union that examines emerging issues in Internet privacy in four European countries. Professor Schwartz has provided advice and testimony to numerous governmental bodies in the United States and Europe. During 2002-2003, he was in residence as a Berlin Prize Fellow at the American Academy in Berlin and as a Transatlantic Fellow at the German Marshall Fund in Brussels. He has also acted as an advisor to the Commission of the European Union on privacy issues.

Event Flyer

[Music]

STEVEN HAYWARD: So, normally, Prof. Yoo would be introducing the lunchtime panel, but of course whenever there’s a big day in the news and the court, as there is today, he’s a television star – as you all know. I’m Steven Hayward, a fellow of the Public Law and Policy Program and fellow of The Institute of Governmental Studies, down in Political Science.

Um, I’ll just introduce my perspective on the panel in the following way: For those of you from overseas, there’s an American saying – it may well be the same saying overseas, I don’t know – that goes: just because you’re paranoid, doesn’t mean they’re not out to get you. And I was reading recently, because this infamous person died about two weeks ago, I was reading the famous manifesto of the so-called Unabomber. And if you ever read about it – it was an attack on technology. And there’s a very bracing part in the middle – this is obviously a, you know, a tortured soul with rage and issues, as we would say – but he was very smart and he has a passage that’s written in the 1990s, very recent. He says what’s going to happen someday when advances in computer technology – and he doesn’t say artificial intelligence, but it’s what he means – what happens then when big institutions and governments start taking our private data without even our knowledge. And that’s on all of our minds today, and is one of the topics we’re taking up with this panel.

Patricia Villalobos was not able to make it due to some travel problems that seem to be endemic right now, so our principal speaker will be Genevra – Ginevra – do that wrong – Cerrina Feroni. She is a professor at the University of Florence, one of the preeminent experts on privacy law and related issues in Europe.

And to moderate or curate the discussion is our own Paul Schwartz, from here at Berkeley Law, who is one of America’s foremost experts on these emerging issues.

PAUL SCHWARTZ: Welcome, everybody. I’m really looking forward to this discussion with Professor Feroni about data privacy law. I’m so happy you were able to join us from Italy today and will be able to tell us about activities there by the Italian Data Protection Commission. I’d also like to thank John Yoo for setting up this international event, and so I’ll now turn the floor over to Professor Feroni.

GINEVRA CERRINA FERONI: So, good morning to everybody. First of all, I’d like to thank Professor John Yoo for this very welcome invitation and for his warm hospitality and friendship. I’m deeply honored to be here to speak at such a prestigious university and in front of such distinguished colleagues as Professor Schwartz, who is a pillar of the data protection discipline in the world. My greetings and thanks also to Professor Raffiotta for his important role in creating and strengthening a close scientific and cultural relationship between our universities. With the questions of Professor Schwartz, I will try to bring my point of view, which is that of a professor of Italian and comparative constitutional law and, pro tempore, of vice president of the Italian Data Protection Authority over these three years, because our mandate is seven years; we were elected by the Italian Parliament in July 2020.

PAUL SCHWARTZ: But do you have any other initial comments or?

GINEVRA CERRINA FERONI: Just to give thanks and to say that privacy in the digital age is a very challenging topic, so I don’t know if we can tackle this topic, privacy in the digital age. There are many, many issues, many problems, and many big impacts of technology on democratic society.

PAUL SCHWARTZ: Thank you! So, for my first question I’d like to ask you about artificial intelligence and ChatGPT. In March 2023, not that long ago, the Italian Data Protection Commission made worldwide headlines by ordering a stop to ChatGPT in Italy due to privacy concerns. Thereby, Italy became the first Western nation to place any limits on ChatGPT. Among its concerns was an absence of any legal basis for ChatGPT that justified, quote, “the massive collection and storage of personal data,” end quote, to train the chatbot; and of course in European data protection law there’s a firm requirement that data processing cannot take place without a legal basis. The Italian Data Protection Commission also stated that OpenAI, which is the company running ChatGPT, failed to check the age of ChatGPT’s users, who were in theory supposed to be age 13 and above; and, of course, here too the GDPR has firm requirements about protecting minors from the collection of personal data. Well, what happened next? One month later, in April 2023, the ban on ChatGPT was lifted. OpenAI responded to the concerns of the Italian Data Protection Commission. It said it would provide greater visibility regarding its privacy policies, and it offered a user consent opt-out form. OpenAI also agreed to provide a new form for European Union users that would allow them to exercise their right to object to the use of their personal data to train the AI models. So, my first question for Professor Feroni, now that we have this background, is whether you have any thoughts about how the discussion about the regulation of AI and privacy will proceed in the EU?

GINEVRA CERRINA FERONI: Yes, I think that it could be interesting for this audience to have an overview of the history of ChatGPT, and I have to underline that the investigation is ongoing. This is the reason why I will not go into the details of the affair, but I will try to summarize some points and some future perspectives. As Professor Schwartz correctly mentioned, in the measure adopted last March we observed, as the Authority, the lack of information provided to users and all parties concerned regarding the data processed by OpenAI. Furthermore, we observed the absence of a legal basis justifying the massive collection and storage of personal data for the purpose of training the algorithms underlying the platform’s functioning. It also came to light that the data provided by ChatGPT’s responses were inaccurate, sometimes fanciful and sometimes completely wrong, and therefore dangerous for personal identity and also for reputation. And last but not least, although the service, according to the terms published by the American company, was intended for users aged 13 and above, we highlighted how the absence of any age verification filter, any age verification system, exposed minors to responses that were entirely inappropriate for their level of development and self-awareness. These significant concerns led us to impose a temporary limitation on the processing of data of Italian users. After our interim measures, the company requested a meeting with the Authority, which we granted, and in that meeting OpenAI confirmed their strong willingness to collaborate with the Authority with the aim of reaching a positive resolution of the concerns raised about ChatGPT and of being compliant with the GDPR.

Our deadline given to ChatGPT was strict, 30 days, and we asked for concrete measures: information provisions, legal basis, exercise of rights, protection of minors, and an undertaking to promote an information campaign to educate individuals about the use of their personal data. OpenAI has indeed made improvements that have enabled ChatGPT to be accessible to Italian users once again, and considering the measures adopted by OpenAI, we ordered the temporary limitation to be suspended. This is, in summary, the story of our measure. On the merits, OpenAI has introduced information and transparency in its website privacy notice for all users, both in Europe and worldwide, to explain how personal data is processed for algorithm training and to emphasize the right to object to that processing. Then they granted individuals living in Europe, including non-users, the right to object to the processing of their personal data for algorithm training through a dedicated online form that is now easily accessible, and the right to deletion, enabling individuals to request the deletion of inaccurate information, because at the moment they state that they are technically unable to correct the output; so we can ask for deletion. Also, for minors, they added a button requiring a declaration of age as over 18, or over 13 with parental consent, to access the service. They also included a date of birth field in the registration form. So, to conclude, we expressed satisfaction with the measures taken by OpenAI, and we hope that OpenAI will comply with the further requests outlined. We expect OpenAI to implement an age verification system, a strong age verification system, and we are aware that this is very complicated, because it concerns not only OpenAI; every platform has the same problem. And then there is a massive communication campaign for Italian users.
And I suppose that it will be presented in a few days in Italy.

And one last thing, an important thing: the European Data Protection Board, which is the authority composed of the president of each national data protection authority, has created a dedicated task force to foster cooperation and to exchange information and documents on possible enforcement actions conducted by the authorities. So we now also have the umbrella cover from Europe, and other countries after Italy have also opened investigations against OpenAI. This decision has been very strong, it has been very famous in the world, and to be honest I was impressed by the critical reactions we received in Italy immediately afterwards. Not in other countries, but in Italy we received some critical opinions; then the climate completely changed, and this has been a strong decision. My opinion is that the merit of this decision, indirectly, has been that of raising the issue of generative artificial intelligence and its impact on people’s lives. A debate has finally opened up, also at the political level; it is not a topic only for seminars, for congresses, or just for universities, but also at the political level, and the debate has become a popular debate. Probably our decision has exceeded our expectations, and the topic has become a subject of general discussion. This is, in my opinion, the most important thing after our judgment. But you asked me about the future, and this is a very challenging topic: the future of AI and the future of AI regulation. You probably know that in Europe we are under European regulation. The European Parliament approved, maybe 10 days or two weeks ago, no more, a proposal on the AI Act.
The point that I’d like to underline concerns the axiological and political meaning of the choice of the European Union, in terms of method and then of merit. In terms of method, the recourse to a regulation, and basing it on certain essential principles, seems to me extremely significant, because it’s the first organic regulation in the world on AI, or artificial intelligence. And the choice is in favor of regulation: after many white papers, many guidelines, and ethical principles, this is a prescriptive regulation, and it’s very important.

The choice in favor of regulation is decisive in a context of a frequent tendency towards deregulation that ends up delegating to the law of the market the definition of the perimeter of rights and freedoms; and it’s a choice that characterizes the entire European digital policy. Of course I refer to the GDPR, but there are also more recent regulations: the Data Governance Act, the Digital Services Act, the Digital Markets Act, and the Data Act under discussion. It expresses, in my opinion, the ambition of Europe to play a crucial role not so much in the ownership of technological assets (this is not a tradition of Europe) but in regulatory governance, a leading role in regulatory governance. The regulatory framework adopted in Europe is flexible on solutions but strict on principles and values, and it is also expressed in many general clauses. After all, this subject matter is characterized by transversality and rapid change, which requires a future-proof regulation. So we introduce a regulation of principle, because the subject matter is in rapid change, and one that is also susceptible to the best integration with internal systems. This is for method. In terms of merit, the structure of the European proposal is a risk-based approach. This is the core of the new proposal of Europe: a risk-based approach. You can imagine a sort of pyramid of severity with a correlative impact assessment.

There are low-risk treatments, medium-risk treatments, high-risk treatments, and prohibited uses of AI. For example, the proposal prohibits the use of AI systems linked to subliminal techniques capable of conditioning the behavior of others, or of exploiting the vulnerabilities of social groups. It also prohibits social scoring systems based on the monitoring of individual conduct, or biometric identification in real time for law enforcement purposes. Obviously there are exemptions when it’s necessary for specifically declared imperative public needs, but with judicial or administrative authorization. And I would note also that this was already anticipated by the GDPR (GDPR is the acronym of the General Data Protection Regulation), enshrined in Article 22. It’s very, very important: the right to explanation, the explainable algorithm, is one of them; the right to human review of the automated decision; the right not to be subjected to exclusively algorithmic decisions with discriminatory effects.

The goal is clear in the purpose of Europe: making technology a tool for promoting freedoms and not discrimination. In this respect I observe that this architecture of AI is a good choice. The regulation must be implemented. Of course there are many areas to implement and to understand well, but in general, the architecture is a good choice. To conclude, there is a crucial point that must be seen, and it is AI governance. The draft approved by the European Parliament, in fact, does not clearly define the competent national authority and the governance of AI, but leaves the decision to each member state. Each member state has to establish one or more national supervisory authorities, and the question of which authority should manage the practical aspects of artificial intelligence remains a very delicate point. Why is this a delicate point? Because of course AI is a transversal and intersectoral theme, and there is a proposal to split the competencies among various authorities. This could be a path; obviously it is an understandable and also legitimate choice. However, it could risk leading to a diversification of responses, resulting in different rules and levels of oversight from sector to sector.

Another proposal could be an information-sharing system between the involved authorities. This solution, too, could carry some risk: if it is not well planned, it could be inadequate for the purpose. Another proposal that emerged from the debate is the creation of a specific digital rights authority, a new one. A digital rights authority, however, has a critical aspect, considering that fundamental rights are not a new subject even though they extend into the digital dimension. In any case, and this is at the discretion of parliament of course, we cannot disregard the central role of data protection authorities at the national level in overall strategic decisions and sector-specific regulation. This is both for the contribution of expertise they could offer to the public and private sectors and to data controllers, and to ensure the justiciability of rights and interests based on well-established practices. And on this topic of artificial intelligence, also before ChatGPT, the data protection authorities in Europe have taken important positions regarding the application of AI systems in universities for remote exams and in the workplace. We had an important decision on food delivery, for example, or for anti-evasion purposes, for web reputation, for preventive medicine. So the data protection authority has know-how in this field, in this sector of AI. Of course, generative AI is another thing, more dangerous and more unknown than the others. This is, in general, my overview of this topic of the future of AI.

PAUL SCHWARTZ: Thank you so much, Professor Feroni. That was masterful, and I appreciate that you’ve touched on both the regulation of AI at the Italian level and the developments at the EU level. It’s really striking from your comments, you know; one initial reflection I would have is just how quickly the EU and Italian regulators have pivoted into this area to come up with what we can already see is going to be a structure or framework of the densest, most thorough regulation of AI in the world. So what I thought we would do now is turn to the Italian Data Protection Commission and, in particular, talk about how its powers have evolved and what, from your perspective, other than ChatGPT, have been the most important activities of the commission recently. The Italian Data Protection Commission was established in 1997 pursuant to the then applicable data protection act. That means it’s been operating for over a quarter century, and its powers include reporting to the Parliament and to the government on new developments that affect privacy, handling citizen complaints, and issuing opinions. So, in your view, how have the powers and activities of the commission evolved, and what have been its most important activities recently?

GINEVRA CERRINA FERONI: Yes, thank you for this question. 25 years is, in effect, a significant time to take stock of our history. I’d like to say that the action of the Garante has not only accompanied but often anticipated or stimulated the social, economic, cultural, and institutional changes in our country. It has been an administration structurally devoted to the future and, since the very beginning, to the service of individuals, today in the digital dimension, as rich in opportunities as in risks. I don’t like rhetoric, and therefore I will not say that it has been an epic story. It’s a story of a small Authority, now about 160 people, but we hope that in a few weeks we will arrive at 200. It’s a small, easy story of a small Authority committed to working to protect Italian citizens from the imbalance of the new forms of power, from coercive devices, from unwanted and aggressive intrusions into their most intimate lives. It’s not epic, because no one claims glory for a job they do with seriousness and a spirit of service. But it has certainly been a great, and in some respects exciting, adventure. The Authority was born in 1997, as you mentioned, in a changing world. The institution itself has the modern form of an independent administrative authority: a strange body that derogates from the traditional categories of representativeness in favor of technical knowledge and precise independence. That independence, which is invoked, practiced, demonstrated, and claimed, is essential.

It’s vital, I would say, given its task and power. Independent from the government and from the market, from economic blackmail, from group pressure; independent, and an administrative authority, which therefore, although it could seem the manifestation of a derogation from the democratic principle, is at the same time an inversion of it, guaranteeing impartial action aimed at the exclusive protection of the individual. Independence is a very strong quality. It’s difficult to stay independent, because during an emergency it is not easy to be independent. As for our history, maybe we can go by keywords, because it is not a long history, but 25 years full of decisions and opinions; so, keywords in chronological order: origins and the relationship with Europe; international terrorism and economic crisis; the GDPR revolution; and the digital pandemic. These are keywords to understand some steps, some passages. First, origins and Europe.

The Authority was born in a fertile jungle, in the midst of technological ferment in both the public and private sectors. Public and private investments in digitization are the offspring of a precise strategy used by Europe. I remember [they] commissioned the law as the child of that strategy, a European strategy. It is the most European of all independent authorities, and in Europe it finds, in fact, its ideal seat of comparison. A real vanguard in the process of Europeanization; Europe is therefore its natural operating context. Then, planetary interconnection upsets the traditional scenario of international communication. No longer databases but cross-border flows represent the physiological dimension of data, and this is digital globalization: the extreme stage of the intensification of commercial exchange and international investment on a global scale, the immediate effect of which is an ever closer interdependence, one might say symbiosis, between Europe, the great consumer of technology, and America, the great producer of technology. It is a transaction that takes place in exchange for personal data: we use technological tools, and we pay in data. This is a transaction.

Second point: international terrorism and economic crisis. It’s precisely from the United States that the news arrives, destined to change the course of history and our way of life. September 11 re-proposes the most terrible and perhaps oldest of balancing tests, that between collective security and the safeguarding of individual rights. The Italian Authority has supervised, with many complex decisions, the processing carried out by the police forces, in an attempt to find the point of balance between the needs of prevention, investigation, prosecution, and repression of crimes and the right to the protection of personal data as a safeguard of personal freedom and dignity. A few years later, another black swan: the Great Recession of 2008, a typhoon with a disruptive impact on society, capable of profoundly changing its approaches to spending and saving. Banks have always been a privileged laboratory for experimenting with innovative data processing, and this is why the Garante, with its decisions on the subject, has demanded high standards of fairness and transparency with regard to the customer, especially at times of systemic crisis. The same precautions have always been demanded by the Garante also from the Tax Administration, in the adoption of stringent security measures to safeguard the data of what is the country’s most important information asset.

The third point is the GDPR. The GDPR is the real turning point. It was approved in 2016 but entered into force in 2018. The pole of accountability, of compliance assessment, shifts to the data controllers: no longer formal prescriptions to observe, but accountability. This is a magic word in the new regulation, accountability. It’s the individual data controller who must adopt the organizational and technical security measures that are perfectly suited to minimize the risk represented by his or her particular processing and context, like a tailor does when sewing a bespoke suit. A task that is anything but simple, of course, in terms of organization, and economically impactful, especially for Italy, because our reality is full of small and very small companies. And so being compliant with the GDPR has had an economic impact.

And the fourth point is the digital pandemic. It has been a very, very strong stress test. The test of the regulation came immediately and unexpectedly with this emergency, and right from the start the pandemic was characterized, in Italy but in every part of the world, by being completely inscribed in a digital dimension. Our Authority was literally inundated with thousands of files, of dossiers. The increase in emergency work, which became a daily occurrence, required a commitment, even before the regulatory one, to understanding what was happening in the country. And several were the open fronts. On the one hand, confronting, for example, private initiatives, sometimes improvised, aimed at maintaining productivity, employment, and in some way health in the workplace and in places open to the public; I’m thinking, for example, of the measuring of body temperature. It was the first measure, in the weeks after March 2020. On the other hand, dealing with the cabinet, with the government, in the management of contagion; there was a big discussion, a big debate, for example, about contact tracing apps. And third, within this scenario, ensuring as far as possible a unitary, centralized management that would not disperse the processing of data relating to citizens’ health in a patchwork of national, regional, and local health data ownership.

The issues were enormous: from green certificates in their various versions to distance learning, smart working, vaccines, and the vaccine plan. Never as in the course of the pandemic has it been realized how much the right to personal data protection really is at the heart of the protection of the individual and collective rights of citizens in a current liberal democratic system. In those intense months, the role of the Garante as an independent supervisor of political choices was expressed in almost daily technical and legal advisory activity. It has not been a simple exercise, and it has not been trivial, because it’s not easy and it’s not trivial to exercise, as I said, independence, especially in a moment of extreme emergency. However, in my opinion it’s precisely in the moment of extreme emergency that the core of fundamental rights must be guaranteed and defended in order to constitute a safeguard against any possible authoritarian decision-making drift. It’s simple, it’s easy in that moment to eliminate the guarantees of fundamental rights. And here opens the great knot, I would say crucial today, of artificial intelligence, which we have already mentioned, in many sectors.

So, to conclude, we are in the midst of a [inaudible] transition, and if we don’t want protections and rights to dissolve we need to find a new look consistent with a new world, to build a new policy and a new geopolitics for the informal spaces of the net. We must identify the relevant actors behind titles and forms and recognize the actual power relations around us. Everything has changed, and today, if we do not change too, we will not survive the impact of technology. What will remain firm is our only benchmark, our compass: man at the center of the technological ecosystem, an ecosystem that we certainly do not deny, because we could never deny the reason why we operate and exist, of course. But “man at the center” is not an empty phrase. It’s a human-centric vision: man at the center, not just the consumer, but the individual with all his private, immaterial, spiritual life, and here, in the option between man and the machine, we see and will always see an inalienable priority. This is our goal. We need this in Italy, but I suppose that the problem is common: we need a great work of digital education and data protection culture at all levels. And I speak to this young audience: it is very, very important to have digital education and a data protection culture at all levels, from the youngest generation to the elderly ones. Our challenge is trying to make people understand that the right to privacy in the digital age has become, even more than before, a huge issue of constitutional law, because it has to do with public and private power and with the rights and freedoms of citizens. That is, with democracy, which is the antithesis of the state of surveillance. Power today is exercised digitally, in the fact of holding everything that a person holds most precious, that is, his or her data, that is, his or her very identity.
So controlling data means controlling information about health, political orientation, sexual orientation, financial situation, history and [unintelligible] and entire life. Constitutionalism was born precisely to place limits on power; this is the core of constitutionalism. If one does not understand the context of reference and continues to place the issue of privacy among the well-known stereotypes (“privacy is useless,” “privacy is only a bureaucratic obstacle,” “privacy does not interest me because I have nothing to hide”; there are many, many stereotypes), one risks losing the deeper sense of the impact of technology on democratic society. So that is why it’s important never to let our guard down and to convey the correct message about what data protection really means, and what the meaning of the action of data protection authorities is; and this is, in a nutshell, our mission.

PAUL SCHWARTZ: Oh, that’s wonderful. Thank you, Professor Feroni. I think what we’ll do, because this brings us just to time, is stop now. If you have questions for Professor Feroni, please just come up and we can continue that way. I don’t want to hold people, and let’s all thank her for a wonderful presentation.

[Applause]

[Music]